Research

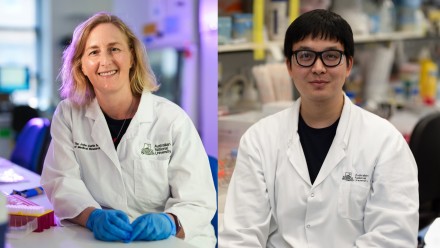

Core research areas of The John Curtin School of Medical Research (JCSMR) include immunology, cancer, genomics, neuroscience, infectious diseases and metabolic disorders.

Our research aims to understand, and provide novel insights into, diseases such as cancer, diabetes and rheumatoid arthritis, as well as conditions such as epilepsy and vision impairment, amongst others. Research at JCSMR is organised within four departments, each of which comprises independent groups and laboratories.

As an integral part of the ANU College of Health and Medicine, we are committed to cross-disciplinary research that will provide solutions to health problems which beset our community. In addition, we continue to pride ourselves on our commitment to the training and mentoring of the young medical researchers of the future.